AutoComplete or AutoSuggest has in recent years become a “must-have” search feature. Solr can do AutoComplete in a number of ways (such as the Suggester, the TermsComponent and faceting using facet.prefix), but in this post we’ll consider a more advanced and flexible option: querying a dedicated Solr core for the suggestions. You may think that this sounds heavyweight, but we’re talking small data here, so it is really efficient and snappy!

Although it takes some work to set up, the benefits of this approach are compelling:

- Suggest on multiple words or whole sentences

- Suggest on the prefix of the whole line and/or of individual words

- Full relevancy tuning capabilities of Solr (in contrast to the plain frequency sorting of TermsComponent or faceting)

- Increased recall using phonetics, fuzzy matching, character normalization, or any other Solr feature

- Rich suggestions including thumbnails, extra text, etc.

- Easy to mix different “categories” of suggestions, e.g. “Book titles”, “Authors”, “Genres”, and group them together

- And much more

So let’s get to it. To make it easy, we’ve shared a ready-to-run example setup at https://github.com/cominvent/autocomplete, which provides a template you can build upon for your own needs. First we’ll guide you through downloading and running the example and indexing some example data with the names of all countries and all major cities in the world. Then we’ll go behind the scenes to explain how it is all set up.

Running the example

The example contains a complete solr-home configured with an “ac” core, and some example data in CSV format which we’ll feed to our core using HTTP POST (curl). Solr itself is not included in the example, so you’ll need to download Solr 3.5 or newer first. We’ll then start the Solr example app, pointing it to our autocomplete solr-home.

- Download and unpack Solr 3.5 if you have not already done so: http://www.apache.org/dyn/closer.cgi/lucene/solr/

- Download and unpack autocomplete example from GitHub: https://github.com/cominvent/autocomplete/zipball/master (alternatively check out the code using git)

- Change into the autocomplete folder, open README.TXT and follow the instructions. When done, you will have Solr up and running with the example data indexed into the “ac” core.

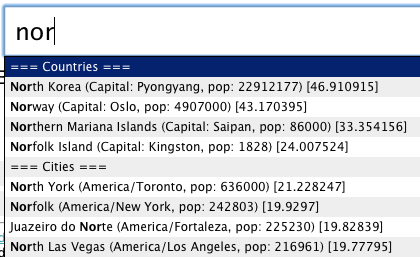

- When you browse to http://localhost:8983/solr/ac/browse and start typing, you’ll see countries and cities suggested

Behind the scenes

The example is made up of these parts:

- A JavaScript hook (in velocity/head.vm) that queries Solr whenever you type a character in the search box

- A velocity template (velocity/suggest.vm), adapted from Solr’s velocity contrib, which reformats the Solr response the way we want it

- A jQuery AutoComplete plugin that renders the output of suggest.vm

- The Solr core configuration itself as defined by schema.xml and solrconfig.xml

- The example data which is indexed into the “ac” core

1. Querying Solr from the page

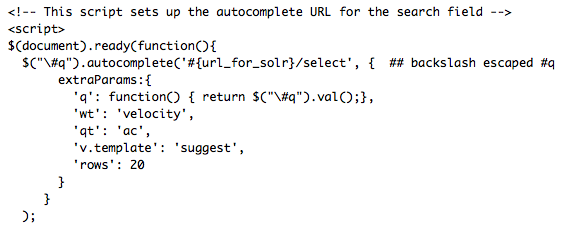

This JavaScript prepares the URL to query. Here we query Solr directly, but normally you’ll want to call a (PHP/JSP/ASPX) servlet of your own, which in turn queries the private Solr server, so put the URL of your servlet here. Your servlet will then call the autocomplete core much as we do here. We call the normal “/select” handler, but use qt=ac to select the handler we want, and instead of XML we ask for a Velocity template called “suggest” (&wt=velocity&v.template=suggest), which formats one suggestion per line. Finally, we ask for 20 results.
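To make the query parameters concrete, here is a minimal sketch of building the same suggestion URL server-side, as your own servlet might. It assumes the core is reachable at http://localhost:8983/solr/ac and that the 20 results are requested with the standard rows parameter; the function name build_suggest_url is hypothetical, and the actual page script in the screenshot is not reproduced here.

```python
from urllib.parse import urlencode

# Assumption: the "ac" core of the example, running locally. In production
# this would be called from your own server-side code, not the browser.
AC_SELECT_URL = "http://localhost:8983/solr/ac/select"


def build_suggest_url(typed_text, rows=20):
    """Build a suggestion query for what the user has typed so far (hypothetical helper)."""
    params = {
        "q": typed_text,           # partial input from the search box
        "qt": "ac",                # pick the "ac" request handler via /select
        "wt": "velocity",          # render the response with Velocity...
        "v.template": "suggest",   # ...using velocity/suggest.vm
        "rows": rows,              # number of suggestions to return
    }
    return AC_SELECT_URL + "?" + urlencode(params)


print(build_suggest_url("new yo"))
# → http://localhost:8983/solr/ac/select?q=new+yo&qt=ac&wt=velocity&v.template=suggest&rows=20
```

Note that urlencode takes care of escaping the user's input, which matters once people start typing spaces, ampersands or non-ASCII characters.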

2. Format the response to suit your Ajax component

In our example we use suggest.vm to format the results so they suit our AutoComplete component, but you would probably want to do this formatting in your client code instead. Our simple jQuery plugin expects one suggestion text per line. A more advanced Ajax component may be able to take in multiple pieces of information, such as thumbnail URLs.
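If you move the formatting into your own code, the job suggest.vm does is small. The sketch below assumes you query with wt=json instead of wt=velocity and that the display text is returned in a stored field named textsuggest; both are assumptions about your setup, and format_suggestions is a hypothetical name.

```python
import json


def format_suggestions(solr_json, field="textsuggest"):
    """Turn a Solr JSON response into one suggestion per line (hypothetical helper)."""
    docs = json.loads(solr_json)["response"]["docs"]
    lines = []
    for doc in docs:
        value = doc.get(field)
        if isinstance(value, list):  # multiValued fields come back as lists
            value = value[0] if value else None
        if value:
            # Newlines inside a value would break the one-per-line format.
            lines.append(value.replace("\n", " "))
    return "\n".join(lines)


sample = ('{"response": {"numFound": 2, "start": 0, "docs": '
          '[{"textsuggest": "Sweden"}, {"textsuggest": "Stockholm"}]}}')
print(format_suggestions(sample))
```

A richer Ajax component could instead receive a JSON list of objects (text, type, thumbnail URL) built from the same docs.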

3. The Autocomplete component

We use the jQuery autocomplete component, which consumes the response from suggest.vm one line at a time and displays it in a nice (ahem) dropdown list.

4. Solr schema and config

The heart of our solution lies in the “ac” core itself. It has a schema that matches the input data, and this should be a good starting point for you too. Apart from the fields we return, we also have some special fields which are only searched: textnge, textng, textphon, extrasearch and phonetic. textnge, textng and textphon are copied from textsuggest but processed differently, while extrasearch and phonetic are fed explicitly, as described below. The processing can be seen from the fieldTypes:

textnge uses fieldType autocomplete_edge which will match only from the left edge of the suggestion text. For this we use KeywordTokenizerFactory and EdgeNGramFilterFactory along with some regex cleansing.
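To see what “match only from the left edge” means, the sketch below imitates that analysis chain in plain Python: the whole line is treated as a single token, as with KeywordTokenizerFactory, and its prefixes are emitted, as with EdgeNGramFilterFactory. The cleansing regexes and the gram sizes are assumptions for illustration, not the exact ones in the example schema.

```python
import re


def cleanse(text):
    """Hypothetical regex cleansing: lowercase, punctuation to spaces, collapse whitespace."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def edge_ngrams_whole_line(text, min_gram=1, max_gram=25):
    """Imitate KeywordTokenizer + EdgeNGram: one token, all its prefixes."""
    token = cleanse(text)
    return [token[:n] for n in range(min_gram, min(max_gram, len(token)) + 1)]


grams = edge_ngrams_whole_line("New York")
print(grams)
# → ['n', 'ne', 'new', 'new ', 'new y', 'new yo', 'new yor', 'new york']
```

A query such as “new yo” (cleansed the same way at query time) equals one of these grams, while “york” does not, so this field only rewards suggestions whose beginning matches what was typed.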

textng uses fieldType autocomplete_ngram, which matches from the start of every word, so that you can get right-truncated suggestions for any word in the text, not only the first one. The main difference from textnge is the tokenizer, StandardTokenizerFactory, which splits the text into words, so the EdgeNGram filter produces N-grams for every single token.
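The same kind of sketch with a word tokenizer shows the difference: every word gets its own prefixes. Here a simple \w+ regex stands in for StandardTokenizerFactory (which is more sophisticated), and lowercasing is assumed to be part of the chain.

```python
import re


def edge_ngrams_per_token(text, min_gram=1, max_gram=25):
    """Imitate a word tokenizer + EdgeNGram: prefixes for every word."""
    tokens = re.findall(r"\w+", text.lower())  # crude stand-in for StandardTokenizer
    grams = []
    for tok in tokens:
        grams.extend(tok[:n] for n in range(min_gram, min(max_gram, len(tok)) + 1))
    return grams


grams = edge_ngrams_per_token("New York")
print(grams)                            # → ['n', 'ne', 'new', 'y', 'yo', 'yor', 'york']
print("york" in grams, "ork" in grams)  # → True False
```

Typing “york” now finds “New York”, which the whole-line field would miss, while a mid-word fragment like “ork” still does not match.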

textphon and phonetic use fieldType text_phonetic_do, which applies the DoubleMetaphoneFilterFactory phonetic filter. This lets a query for “muhammed” also match “mohammad” etc. Note that it does not make sense to combine N-grams and phonetics, so we only compute phonetic normalization per whole word. The two fields differ in how they are populated: textphon gets populated with a copyField from textsuggest, while “phonetic” must be fed explicitly in the content. This lets you choose whether phonetics should apply to all suggestions or just some.
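Double Metaphone is too long to reproduce here, so the sketch below swaps in classic Soundex, a much simpler phonetic algorithm, purely to illustrate the idea: different spellings map to the same code, while a truncated prefix gets a different code. This is not what DoubleMetaphoneFilterFactory computes; it only shows why phonetic codes are computed per whole word.

```python
def soundex(word):
    """Classic American Soundex (a stand-in for Double Metaphone in this sketch)."""
    codes = {}
    for letters, digit in (("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                           ("l", "4"), ("mn", "5"), ("r", "6")):
        for ch in letters:
            codes[ch] = digit
    word = "".join(c for c in word.lower() if c.isalpha())
    if not word:
        return ""
    result = word[0].upper()
    prev = codes.get(word[0], "")
    for ch in word[1:]:
        if ch in "hw":
            continue  # h and w do not separate letters with the same code
        code = codes.get(ch, "")
        if code and code != prev:
            result += code
            if len(result) == 4:
                break
        prev = code  # vowels reset prev, so repeated codes across a vowel count
    return result.ljust(4, "0")


print(soundex("muhammed"), soundex("mohammad"))  # → M530 M530
print(soundex("muh"))                            # → M000
```

The prefix “muh” gets a different code than the full name, which is why phonetic matching on N-grams would produce noise rather than recall.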

Finally, the field extrasearch is simply queried in addition to textsuggest, and is a way to match hidden text that is not displayed. In our example we add country codes to this field so you can get a match for “Sweden” by typing “SE”. This field could also contain your hand-edited list of synonyms.

solrconfig.xml

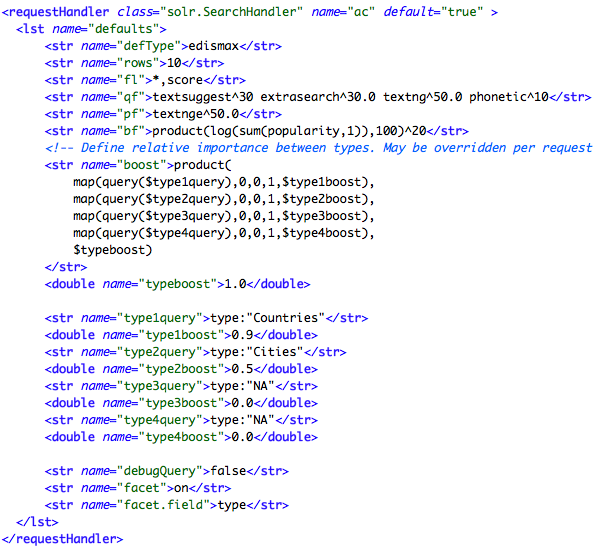

In our solrconfig we have set up two request handler configs: one for our “/browse” interface, for testing, and one called “ac” without the Velocity parts. In real life you would not have a “/browse” section, because your main search index would reside in another core and probably contain other data than the autocomplete data. In our search GUI, if you click the search button you will get a normal search result directly from the “ac” core, so don’t be confused by that 🙂 Let’s look at the config:

We use the edismax query parser and set it up with “qf=textsuggest^30 extrasearch^30.0 textng^50.0 phonetic^10” and pf=textnge^50.0. So the most important field is textng, and we weight down the phonetic results to avoid noise. If you want to always search phonetically, add textphon to qf. The textnge field is searched through the pf (phrase fields) parameter, to boost suggestions that match from the beginning of the query.
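The qf and pf values above normally live in the “ac” handler in solrconfig.xml. If they are configured as handler defaults (rather than invariants), request parameters override them, so you can experiment per request. Below is a minimal sketch of the same settings as a Python dict; ac_query_params is a hypothetical helper, and the textphon^10 weight is an assumption, since the text does not give one.

```python
# edismax settings as quoted in the text above.
AC_PARAMS = {
    "defType": "edismax",
    "qf": "textsuggest^30 extrasearch^30.0 textng^50.0 phonetic^10",
    "pf": "textnge^50.0",
}


def ac_query_params(typed_text, always_phonetic=False):
    """Per-request parameters for the "ac" handler (hypothetical helper)."""
    params = dict(AC_PARAMS, q=typed_text)  # copy, so AC_PARAMS stays untouched
    if always_phonetic:
        # Assumed weight: search the copyField-populated textphon for every entry.
        params["qf"] += " textphon^10"
    return params


print(ac_query_params("muhammed", always_phonetic=True)["qf"])
```

Keeping the phonetic weight low relative to textng reflects the trade-off described above: phonetic matches add recall but also noise.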

In addition to textual relevance, we boost (bf) by popularity (in our case population), and we also apply a multiplicative boost (boost) formula so that country entries are suggested more readily than those with type=Cities. This lets you tune the balance between the various types dynamically, without reindexing. You can even change a weight with a URL parameter instead of editing solrconfig.xml.
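A rough mental model of how the two boosts combine: in edismax, bf adds a function score to the relevance score, while boost multiplies the whole score. The toy model below ignores Lucene's normalization details, uses log10(population) as a hypothetical bf function, and uses made-up entries and numbers; the real function query syntax in solrconfig.xml is not reproduced here. Passing type_weights per call plays the role of the URL parameter mentioned above.

```python
import math


def suggestion_score(text_score, population, doc_type, type_weights):
    """Toy model: (relevance + additive bf) * multiplicative boost."""
    popularity = math.log10(population + 1)    # hypothetical bf function
    weight = type_weights.get(doc_type, 1.0)   # hypothetical per-type weight
    return (text_score + popularity) * weight


def rank(candidates, type_weights):
    scored = [(suggestion_score(ts, pop, t, type_weights), name)
              for name, t, ts, pop in candidates]
    return [name for _, name in sorted(scored, reverse=True)]


# Made-up entries with equal text relevance: (name, type, text_score, population)
candidates = [
    ("Examplestad", "Cities", 2.0, 5_000_000),
    ("Examplia", "Countries", 2.0, 500_000),
]
print(rank(candidates, {"Countries": 1.0, "Cities": 1.0}))  # → ['Examplestad', 'Examplia']
print(rank(candidates, {"Countries": 2.0, "Cities": 1.0}))  # → ['Examplia', 'Examplestad']
```

Doubling the country weight flips the order without touching the index, which is the point of using a multiplicative boost that can be changed at query time.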

5. The example data

The example data file ac-example.csv is a comma-separated file (which you can open in a spreadsheet application if you want a more readable view). Its columns match our schema, and you can easily use it as a template for entering your own autosuggest data. To feed the file to Solr you may use any HTTP client you like, such as curl, which is what feed-ac.sh (referenced in README.TXT) uses. This concludes the walkthrough. Comments welcome!
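If you generate your own suggestion data, here is a minimal sketch of writing it as CSV and preparing the HTTP POST in Python instead of curl. The column names are hypothetical (check the header row of ac-example.csv for the real ones), and the /update/csv path assumes the core exposes Solr's CSV update handler, as the stock Solr 3.x example config does; the request is only built here, not sent.

```python
import csv
import io
import urllib.request

# Hypothetical columns; use the header row of ac-example.csv as the reference.
FIELDS = ["id", "textsuggest", "type", "population", "extrasearch"]


def build_csv(rows):
    """Serialize dict rows to CSV text; missing columns become empty values."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def build_feed_request(csv_text, core_url="http://localhost:8983/solr/ac"):
    """Prepare a POST to the (assumed) CSV update handler of the "ac" core."""
    return urllib.request.Request(
        core_url + "/update/csv?commit=true",
        data=csv_text.encode("utf-8"),
        headers={"Content-Type": "text/csv; charset=utf-8"},
        method="POST",
    )


csv_text = build_csv([
    {"id": "1", "textsuggest": "Sweden", "type": "Countries", "extrasearch": "SE"},
])
req = build_feed_request(csv_text)
# urllib.request.urlopen(req) would send it to a running Solr instance.
```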

Update September 2014

The smart folks at mappy.com have extended this example with a geospatial component in the ranking [2].

References

[1]: Lucid’s blog post about AutoComplete using edgeNgram

[2]: Mappy.com tech blog

Miles Wortho

Adrian Höhn